This piece was originally published in Just Security.

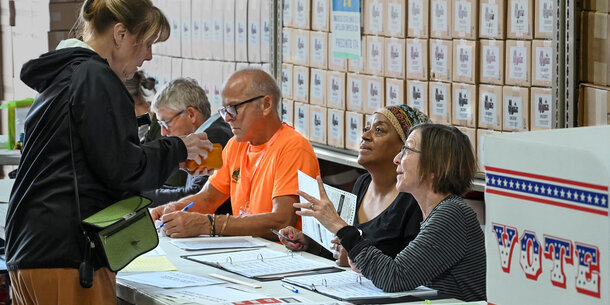

In early December 2020, Ruby Freeman received an email: “We are coming for you and your family. Ms. Ruby, the safest place for you right now is in prison. Or you will swing from trees.”

Freeman had been a temporary poll worker in Fulton County, Georgia, in the 2020 election. Her daughter, Shaye Moss, was with her at the State Farm Arena in Atlanta, counting ballots as a county employee on the night of the election. Footage of the pair engaging in routine vote-counting procedures went viral after Rudy Giuliani and his legal team began to falsely claim it showed them conducting election fraud. As the lies about what happened that night in Atlanta spread on social media, Freeman and Moss were thrown into a firestorm. Racist, violent language arrived via letters, texts, phone calls, emails, social media messages, and even in person at each of their front doors.

Freeman and Moss’ lives were forever changed. “I miss my old neighborhood because I was me,” Freeman said as she testified in a subsequent defamation trial against Giuliani about the damage to her reputation. “I could introduce myself. Now I just don’t have a name.”

The two women are among thousands of election workers who have faced threats, intimidation, and abuse as a result of the election lies that have gained traction in recent years. More broadly, the spread of false election information has eroded cornerstones of American democracy, contributing to flagging confidence in American elections, disenfranchisement of voters, and massive turnover among election workers.

As destructive as these false election narratives have been, there is good reason to believe the problem may be markedly worse in 2024. Five factors are poised to degrade the election information environment even further compared with 2020: (1) right-wing legal and political attacks have successfully deterred key institutions, organizations, and government agencies from addressing falsehoods about the election process; (2) domestic actors appear increasingly robust and coordinated in their broader efforts to undermine confidence in American elections; (3) social media companies have drastically reduced efforts to moderate false election content and amplify accurate information; (4) a convergence of recent geopolitical crises seems set to galvanize renewed interest from foreign adversaries such as Russia, China, and Iran in interfering in U.S. elections; and (5) recent advances in artificial intelligence mean adversaries of American democracy have access to tools that can boost voter suppression efforts and pollute the information environment on a scale and level of sophistication never seen before in a federal election cycle, though AI may also help address these threats.

It doesn’t have to be quite this bad. Governments, election officials, the media, tech companies, and civil society organizations can take steps to stem the tide of false election narratives.

Right-Wing Efforts to Degrade Defenses Against Disinformation

At the center of the problem is a years-long political and legal effort to dismantle the human and institutional networks that have documented and pushed back on election disinformation in prior election cycles. A pivotal episode in this push began in 2022, when the attorneys general of Missouri and Louisiana, along with a number of private plaintiffs, sued numerous Biden administration agencies and officials with the aim of stifling cross-sector collaboration to address online falsehoods. The lawsuit alleged that the White House, agencies, and officials coordinated “with social media giants,” and certain nonprofits and academic institutions, to censor and suppress speech related to, among other things, “election integrity.” That case, now styled Murthy v. Missouri, is currently before the Supreme Court.

The lawsuit has caused serious confusion within federal agencies about how to communicate with social media platforms about foreign and domestic threats to U.S. elections. An extraordinary ruling by the Fifth Circuit Court of Appeals last fall upheld much of a lower court order that restricted the Biden administration’s communication with social media companies. While the Supreme Court has stayed the order for the moment — and an array of legal scholars and practitioners have panned the flawed judgments of the lower courts — many government offices tasked with combatting foreign disinformation campaigns appear deeply skittish and unsure whether or how to continue their past work. In November, the Washington Post reported that federal agencies have “stopped warning some social networks about foreign disinformation campaigns on their platforms, reversing a years-long approach to preventing Russia and other [nation-state] actors from interfering in American politics” with less than a year to go before Election Day.

The lawsuit also has had a chilling effect on researchers, some of whom may fear being wrongly perceived as exerting improper influence on behalf of the government. The district court judge who heard the case initially enjoined federal officials from working with some of those third-party researchers, though that portion of the injunction was later reversed.

For researchers, election officials, and civil servants, a parallel investigation by Republican members of the U.S. House has had a perhaps even greater chilling effect. Over the past year, Ohio Representative Jim Jordan’s Select Subcommittee on the Weaponization of the Federal Government has made such individuals and organizations the target of voluminous information requests and subpoenas. (Congress enjoys relatively broad, though not unconstrained, authority to issue subpoenas to private parties in aid of its legislative functions, according to Supreme Court precedent.) The committee has subpoenaed government agencies, for example, to investigate messages between tech companies and the administration, accusing the Biden administration of silencing right-wing voices. A committee report published last year spuriously labels communication between disinformation researchers and social media platforms the “newly emerging censorship-industrial complex.”

Alex Stamos, former director of one of the leading election-disinformation tracking operations, the Stanford Internet Observatory, noted that “since this investigation has cost the university now approaching seven [figure] legal fees, it’s been pretty successful, I think, in discouraging us from making it worthwhile for us to do a study in 2024.” At the same time, the State Department’s Global Engagement Center, tasked with combatting foreign propaganda, has also been targeted by Jordan’s committee, which called for its defunding. The New York Times has noted that a right-wing advocacy group, helmed by Stephen Miller, who served as a Trump advisor during his term in office, has filed a class-action lawsuit “that echoes many of the committee’s accusations and focuses on some of the same defendants,” including researchers at Stanford, the University of Washington, the Atlantic Council, and the German Marshall Fund.

This menacing atmosphere affects local election offices too. For example, one election official in Florida recently said he can no longer speak to constituents about his work with the Elections Infrastructure Information Sharing & Analysis Center (EI-ISAC) — an information-sharing network for election officials that sometimes addresses falsehoods about the voting process — or even use the word “misinformation” out of fear of backlash.

Social Media Companies Retreat from Content Moderation

Amid such intimidation of those seeking to fight false election information, major social media companies have dramatically cut back on staff resources devoted to addressing these issues. Teams responsible for “trust and safety,” “integrity,” “content moderation,” “AI ethics,” and “responsible innovation” were ravaged by a series of layoffs beginning in late 2022. Despite their often-fractious relationship, in June 2023, Mark Zuckerberg praised Elon Musk’s efforts to “make Twitter a lot leaner,” after Musk cut roughly 80 percent of the company’s staff. Some companies are choosing to partially or entirely outsource this type of work, which has led to startups offering trust and safety as a service to the same companies that made these deep cuts — often without significant access to or influence over core product design choices that exacerbate the spread of election disinformation and other troubling content. While cuts to civic responsibility teams seem to have had the short-term impact of reducing corporate costs, two former Facebook integrity workers argue that the long-term effects will be “unbearably high,” both for the companies themselves and for society at large, by intensifying the volume of toxic content on the platforms and making platforms less useful and rewarding for users. Unfortunately, even if the companies eventually rebuild these teams, it is unlikely to happen in time for elections in 2024.

The fracturing of the social media environment poses additional challenges. As Twitter sheds users by the month and Facebook loses relevance for younger users, a proliferation of new platforms in the past few years seeks to fill the gaps. TikTok is the most notable success story in terms of attracting users, but the growth of numerous other platforms — including Parler, Gab, Truth Social, Mastodon, Bluesky, Substack, Telegram, Threads, and Signal — is evidence that fragmentation is real. That fragmentation can have benefits: some of these new platforms are attempting to be interoperable with one another, which, as with email, could give people more options for how to engage online and in the longer term disrupt the entrenched behemoths of the sector. But it also makes it even more difficult for researchers to track online disinformation campaigns. And of course, some of these platforms may be deliberately providing a haven for purveyors of disinformation and hate speech.

Encrypted one-to-one or group messaging platforms like WhatsApp, Telegram, iMessage, and Signal are perhaps the most challenging forums to address when it comes to election disinformation. Because some or all of the content on these platforms is encrypted — that is, it is protected against unauthorized access — the companies that operate them have limited visibility into disinformation campaigns spreading in their own backyards. One tool available to encrypted messaging platforms — acting in response to prohibited content reported by users — is likely underutilized. Given the platforms’ lack of transparency, though, external researchers have a poor understanding of the frequency or effectiveness of enforcement efforts. For example, while Meta releases quarterly Community Standards Enforcement Reports that include details from its Facebook and Instagram platforms, WhatsApp (also owned by Meta) is conspicuously absent from those reports. Telegram was the subject of a recent exposé describing how the platform has a long history of allowing violent extremist groups to persist on its platform, despite having the ability to remove these groups.

With the incredible power of personalization and conversation afforded by AI systems, it is not hard to imagine how chatbots could be surreptitiously deployed on encrypted platforms to conduct mass influence operations designed to sway election outcomes or undermine trust in legitimate outcomes. Similar types of covert persuasion campaigns, albeit without the large-scale personalization made possible by generative AI tools, have been taking place in Brazil since 2018. They have also had notable impacts on Latino and Indian communities in the United States in particular, populations among whom WhatsApp is particularly popular.

New Threats of Foreign Interference

Meanwhile, the threat of foreign influence campaigns has only grown since the 2020 election. With the continuation of Russia’s war on Ukraine, growing tensions in the Middle East amid the war between Israel and Hamas, and increased friction between the United States and China, foreign powers have considerable interests in the outcome of the 2024 election. The Department of Homeland Security, the National Intelligence Council, and major tech companies have warned since 2022 that Russia, Iran, and China are all likely to launch interference efforts in 2024, with Russia as the “most committed and capable threat” to American elections, according to Microsoft.

These campaigns have already begun. In November, Meta found that thousands of Facebook accounts based in China were impersonating Americans and posting about polarizing political topics. Meta’s most recent adversarial threat report noted that the company had already identified and removed multiple foreign networks of fake accounts originating in China and Russia, and highlighted that similar networks pose an ongoing threat to the information environment. Chris Krebs, the former head of the U.S. government’s Cybersecurity and Infrastructure Security Agency (CISA), has warned that foreign cybersecurity threats will be “very, very active” this election cycle.

Stronger Domestic Networks of Election Deniers

At the same time, the domestic networks used to spread false information and undermine confidence in American elections have, if anything, strengthened. A conglomeration of right-wing organizations called the Election Integrity Network, led by election denier and Trump lawyer Cleta Mitchell, encourages people to “research” their local election offices to identify supposedly unsavory influences, hunt for voter fraud by signing up as poll watchers, and perform amateur voter roll list maintenance. The group claims more than 20 right-wing organizations as partners, ranging from small policy organizations to more well-known entities like the Heritage Foundation.

A large number of changes in election procedures in recent years also risk breeding more election falsehoods. Since the 2020 presidential election, several laws — in Texas, Florida, Georgia, South Dakota, and elsewhere — have expanded poll observer powers by offering watchers more latitude or instituting vaguely defined criminal prohibitions targeted against officials who do not give watchers ample observation access. While poll watchers play an important transparency function in elections, prominent election deniers have encouraged some watchers to wrongheadedly perceive their mission as undermining confidence in election integrity and confirming a baseless belief that widespread election fraud occurs in American elections. In the 2020 election, for instance, unreliable poll watcher “testimony” provided fodder for numerous meritless legal challenges to election results that were universally rejected by courts or otherwise failed.

AI Will Amplify Threats

Whether foreign or domestic, adversaries of American democracy also now have easy access to tools that can further pollute the information environment related to the 2024 election with far fewer resources than in the past. Candidates and activists have already used AI to create numerous digital fakeries, from deepfakes spread by Ron DeSantis’s presidential campaign depicting Trump embracing Dr. Anthony Fauci, a respected former top health official derided by some Republicans, to a Republican National Committee video showing a series of dystopian calamities playing out in another Biden presidential term. In another case, a likely deepfake (digitally created content that appears authentic) of a Chicago mayoral candidate glibly discussing police brutality circulated on the eve of the city’s primary election in 2023.

Beyond the spread of deepfakes to influence vote choices, AI can be exploited to spoof election websites, impersonate election officials, manufacture crises at polling centers, and cultivate the impression that more people believe false narratives about the election process than actually do. Malevolent actors can use tools underlying generative AI chatbots to automate conversations with voters that are designed to deceive, and they can do so at scale. Conversely, candidates who wish to avoid accountability for their documented actions could exploit the widespread cynicism and distrust in content authenticity spawned by the proliferation of generative AI to dismiss such documentation as fake.

AI could also be used to fuel disinformation by powering frivolous voter challenges and purges premised on election denial in 2024. In the 2022 midterm elections, coteries of activists combed through voter registration records and other miscellaneous data sources to bolster baseless claims of contests marred by widespread fraud. On the basis of partial and incomplete evidence, numerous activists lodged systematic challenges to tens of thousands of voters’ eligibility and promoted the results to seed doubt about the integrity of U.S. elections. One group in Georgia challenged the eligibility of 37,000 voters in a county with about 562,000 registered voters, forcing the county to validate the status of thousands of voters in the months before the election. In some cases, activists were mobilized by well-funded and coordinated national organizations spearheaded by the Election Integrity Network. That network is now testing a flawed AI tool — EagleAI — to match data more swiftly and allow users to fill out voter challenge forms with only a few clicks. EagleAI is riddled with deficiencies, relying on sources that may not be accurate or up-to-date for the purpose of determining voter eligibility.

Yet, the product is not only being offered to activists to make challenges. EagleAI is also being marketed to county election officials to flag voters for voter purges. At least one county in Georgia recently approved use of EagleAI to help maintain its voter rolls. These tactics — worsened by AI — threaten to disenfranchise voters and operate as a force multiplier for false information about the election process. As Georgia’s elections director recently put it, “EagleAI draws inaccurate conclusions and then presents them as if they are evidence of wrongdoing.”

Steps to a Better Information Environment

The new challenges to the information environment for elections require a multifaceted response from media, election officials, tech companies, the courts, and policymakers. This is a moment in which almost anyone can pitch in to defeat the election lies that have been so destructive in recent years, and provide Americans with accurate information about voting and elections. The following outlines some of the steps that should be taken now, for this election season, by U.S. elections officials, social media platforms, AI companies, and the news media (which are of course critical to ensuring the public has accurate information), and measures that federal and state governments should take in the longer term.

Elections officials should:

- Double down on cybersecurity best practices, such as transitioning to .gov domains to lessen the potential influence of websites spoofed through AI or other methods, because the .gov domain is administered by CISA and made available only to “U.S.-based government organizations and publicly controlled entities,” unlike other top-level domains such as .com that anyone can register for a fee.

- Conduct affirmative, well-timed voter-education campaigns that address common false narratives about the election process.

- Be prepared to nimbly adapt to novel scenarios by expanding their network of civil society partners and messengers to disseminate accurate information in the event of unexpected developments in the election process.

- Familiarize themselves with resources that will allow them to prepare for the increased security threats from AI.

Social media platforms should:

- Reverse the backsliding on disinformation and election security. Given the recent cuts, it’s hard to see them taking this step absent substantial public and political pressure, but they should bolster their trust and safety, integrity, and election teams, so that the teams can better handle the onslaught of election-related threats coming this year in the United States and abroad.

- Maintain meaningful account-verification systems, particularly for government officials and news organizations.

- Address deepfakes and crack down on coordinated bots and AI-generated websites peddling phony “news.”

- Ensure that moderation teams dedicated to non-English languages are adequately staffed and that content that would be flagged in English is also flagged in other languages. There is substantial evidence that major social media companies have failed language minorities in the United States, particularly when it comes to curbing misinformation and providing accurate election information. Major companies such as Meta typically devote fewer resources to monitoring American elections content in minority languages, and rules-violating content that is addressed in English is sometimes left up, or left up longer, in languages such as Spanish.

AI companies should:

- Urgently make good on the commitments that they have publicly agreed to since a July White House meeting, which include deploying “mechanisms that enable users to understand if audio or visual content is AI-generated.” Adobe, Google, and OpenAI seem to be the companies taking this commitment most seriously, but they can’t succeed without the whole ecosystem getting on board. As a first step, all generative AI companies should urgently add difficult-to-remove watermarks to their AI-generated content, something that has already been implemented by Google DeepMind in its SynthID product.

- Coalesce, with the tech industry, around the Coalition for Content Provenance and Authenticity’s (C2PA) open standard for embedding content-provenance information in both authentic and synthetic content. Hundreds of major companies have already signed onto this standard, including Microsoft, Intel, Sony, and Qualcomm, but critically and conspicuously absent are Apple, ByteDance, and X, which have only said they’re considering it but haven’t explained the delay. Without these keystone players joining the coalition, the effort is meaningless.

- Transparently announce commitments to election security, similar to the list of pledges shared recently by OpenAI, including the announcement (but not yet the release) of a “classifier” that the company says has the potential to identify what images were produced by its generative AI systems, and adoption of the C2PA standard. All AI companies, and social media platforms for that matter, should make similar pledges, either because they already publicly signed onto these efforts at the White House, or because they may be mandated to do so soon anyway under European laws such as the EU AI Act and the Digital Services Act, which currently has limited requirements. News reports indicate that several social media and tech companies (not including X) may announce an agreement at this weekend’s Munich Security Conference detailing commitments to reducing election-related risks from AI. The devil will be in the details, as well as in their actual execution of those commitments. (During the Munich event after original publication on Just Security, X did join in making that commitment, in what appears to be a good step by these companies.)

- Further fine-tune models and limit interfaces to bar the impersonation of candidates and officials and the spread of content that risks vote suppression, and ensure that third-party developers using their tools and services abide by the same rules. OpenAI’s recent suspension of the third-party developer of the Dean Phillips presidential campaign bot is a good example of this.

- Halt development and distribution of “open source” AI systems at generative AI companies like Meta and Stability AI (systems that currently allow operators to fairly easily disable all of their safety mechanisms) until the companies can address the ability of users to utilize their systems for inappropriate purposes, including to spread election lies and conspiracy theories with deepfakes, chatbots, and other AI tools.

News media and journalists should:

- Cultivate authoritative sources on elections, including election officials, so that when sensational stories take hold, they can quickly verify or rebut information circulating in their communities.

- Publish pre-election stories on any confusing or new topics, an approach known as “pre-bunking,” or getting ahead of potential targets of disinformation. That should include providing voters with accurate context and perspective related to commonplace glitches or delays.

- Make an extra effort to reach new voters and new citizens, who may be less familiar with American elections. While many journalists acted on this advice in 2020 and 2022, even more of this outreach, as well as real-time debunking, will be necessary this year.

Federal and state policymakers should:

- Pass laws that will make it more difficult for bad actors to disrupt American elections through the spread of disinformation, with measures that would:

  - Protect voters against the spread of malicious falsehoods about the time, place, and manner of elections.

  - Ensure election offices have adequate funds to regularly communicate with and educate voters.

  - Invest in critical physical and cyber safeguards for the protection of election workers and infrastructure.

At the state level, some of these reforms have passed in recent years, but not at nearly the pace they should have. In Congress, action to reform and strengthen democracy has gotten caught up in increased polarization and dysfunction. In the past, major events — such as hanging chads and disputed vote counts in the 2000 election or Russian cyberattacks on U.S. election infrastructure in the 2016 election — led the federal government to embrace major election-related reform. The events of January 6, 2021, have so far not resulted in similarly substantial changes designed to mitigate the harm caused by a poisonous information environment.

Before additional damage is done to the institution of elections, both the private sector and government must work harder and more seriously to ensure critical steps are taken to help safeguard American democracy from these falsehoods.